Why I don't use libraries for Multi-LLM projects

Don't get stuck using the wrong libraries in your AI projects

If you are starting a project that needs to use multiple LLMs, it is tempting to look for a library that lets you swap them out easily. I have used LiteLLM in the past, but more for its load balancing features than for its ability to "swap out" different LLM services.

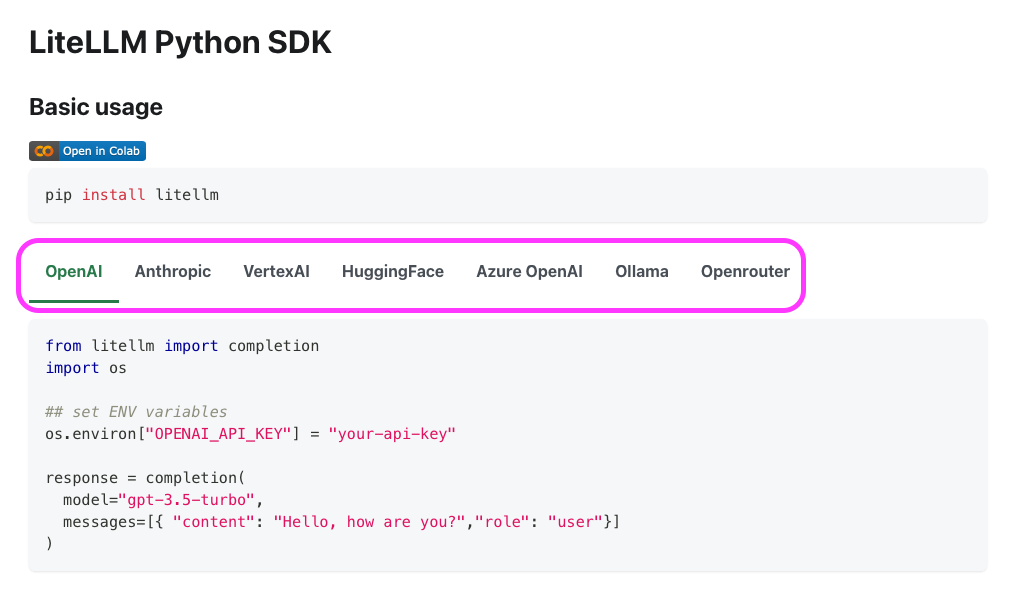

It might be tempting to use a library like this to swap out services based on, say, cost. It usually starts well when you are using basic functionality like chat completions. For a simple project it is a great tool: it lets you switch between OpenAI, Anthropic, Vertex AI, Hugging Face, Azure OpenAI, Ollama and OpenRouter just by changing a couple of lines of code.

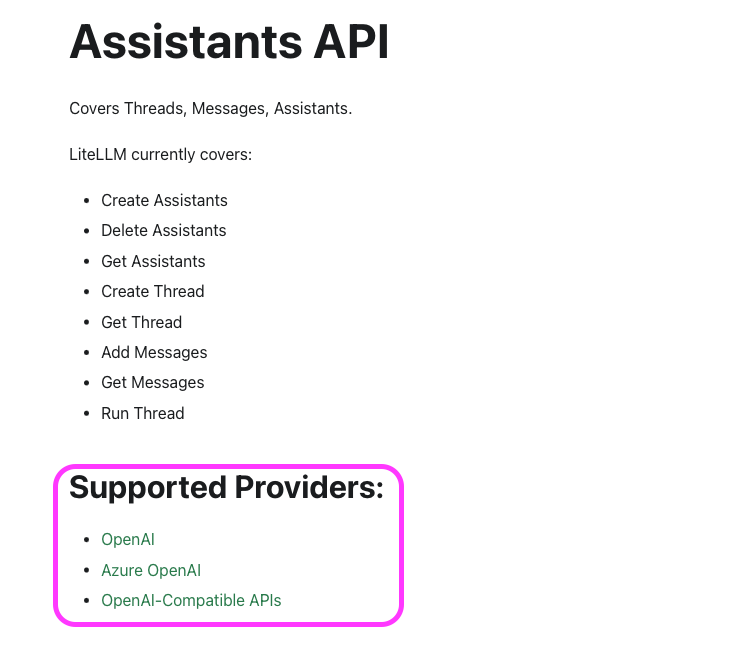

But as you continue to build out your application, you inevitably end up using more complex functionality, for example OpenAI features such as structured output or the Assistants API, or Anthropic's computer use, bash and file-editing tools.

Once you bake this advanced functionality into your application, you can no longer simply "swap" out your LLM: the abstraction library on top cannot provide a feature that the underlying API does not offer. Take the Assistants API: only OpenAI, Azure OpenAI and OpenAI-compatible APIs are supported. This means you can no longer change a couple of lines of code to, say, move your application to an Anthropic model. Your code will break.
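
One way to see why the swap breaks is to make the application's feature dependencies explicit and fail fast when a provider cannot cover them. This is a minimal, hypothetical sketch, not part of LiteLLM or any provider SDK: `CAPABILITIES`, `check_swap` and `UnsupportedFeatureError` are invented names, and the capability map is illustrative only, filled in with the examples mentioned above rather than an authoritative support matrix.

```python
# Illustrative capability map, NOT an authoritative list. Entries reflect only
# the examples mentioned in this post; check each provider's own docs.
CAPABILITIES: dict[str, set[str]] = {
    "openai": {"chat", "assistants"},
    "azure_openai": {"chat", "assistants"},
    "anthropic": {"chat", "computer_use"},
}


class UnsupportedFeatureError(RuntimeError):
    """Raised when a provider lacks a feature the application depends on."""


def check_swap(provider: str, required: set[str]) -> None:
    """Raise if `provider` cannot serve every feature in `required`."""
    supported = CAPABILITIES.get(provider, set())  # unknown provider -> nothing
    missing = required - supported
    if missing:
        raise UnsupportedFeatureError(
            f"{provider} does not support: {', '.join(sorted(missing))}"
        )


# An app that only uses chat completions can swap freely...
check_swap("anthropic", {"chat"})

# ...but one built on the Assistants API cannot.
try:
    check_swap("anthropic", {"chat", "assistants"})
except UnsupportedFeatureError as exc:
    print(exc)  # anthropic does not support: assistants
```

Running a check like this at startup turns a silent assumption ("we can always switch providers") into an explicit list of features you would have to replace first.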

For now, I stick to native tools because it's still early and things are moving fast. Frameworks are either falling behind or becoming tied to a single service. It isn't much effort to build your own small library of utilities that you can change quickly and independently, rather than raising a change request on an open-source repo that may never be addressed.

In summary: understand the business requirements, map them to the tools that can actually fulfil them, and build yourself a Swiss Army knife of utilities that makes swapping easy.