Monitoring running costs of LLM Applications

Don't let your LLM costs spiral out of control

Why do you need Monitoring?

The backbone of your LLM applications should be monitoring, traces, evals, prompt management and metrics. If you cannot measure it, you don't know where to cut! These are the foundations that allow you to debug and improve your LLM application. There are now several platforms you could use for this. I currently tend to use either Langfuse or Microsoft's AI tracing and evaluation tools. You just need to pick one and use it, and by use it, I mean really use it.

About two years ago, there was an obsession with monitoring every token because of small context windows and high per-token costs. That obsession now seems to have taken a back seat. There were also very few AI libraries at the time to help with development, but with the emergence of LLM development frameworks, more and more concepts have been abstracted away and handled "under the hood". And no one usually checks under the hood unless there are problems.

As more AI applications are built and pushed into production in 2025, utilization costs will increasingly be scrutinized. With the rise of techniques such as chain-of-thought, reasoning and retries, several calls may be made to the LLM before it produces a result. The purpose here is not to go into detail on these concepts, but to give you an example of how costs can easily multiply when you don't use the right tool for the job.

Understand the impact of libraries

The Python code snippet below extracts JSON from the string "Extract jason is 25 years old", which should return the following JSON:

```json
{"name":"Jason","age":25}
```

I use the Instructor library, which lets me set a number of retries so the LLM can be asked again until it produces a valid response. I also use Pydantic to define the object's properties, and in this case I want the name to be in uppercase. The function get_response_instructor() is traced by Langfuse using the @observe() decorator.

```python
import os

import instructor
from langfuse.decorators import observe  # Langfuse v2 SDK; in v3 use `from langfuse import observe`
from openai import AzureOpenAI
from pydantic import BaseModel, field_validator


class UserDetails(BaseModel):
    name: str
    age: int

    @field_validator("name")
    @classmethod
    def validate_name(cls, v):
        # Reject any name that is not fully uppercase; Instructor retries on this error
        if v.upper() != v:
            raise ValueError("Name must be in uppercase.")
        return v


@observe()
def get_response_instructor():
    # Apply the Instructor patch to the Azure OpenAI client
    client = instructor.from_openai(AzureOpenAI(
        azure_endpoint=os.getenv("AZURE_OPENAI_ENDPOINT"),
        api_key=os.getenv("AZURE_OPENAI_API_KEY"),
        api_version=os.getenv("AZURE_OPENAI_API_VERSION"),
    ), mode=instructor.Mode.TOOLS)

    resp = client.chat.completions.create(
        model="gpt-4o",  # on Azure this is the deployment name
        response_model=UserDetails,
        max_retries=2,
        messages=[
            {"role": "user", "content": "Extract jason is 25 years old"},
        ],
    )
    return resp


instructor_response = get_response_instructor()
```

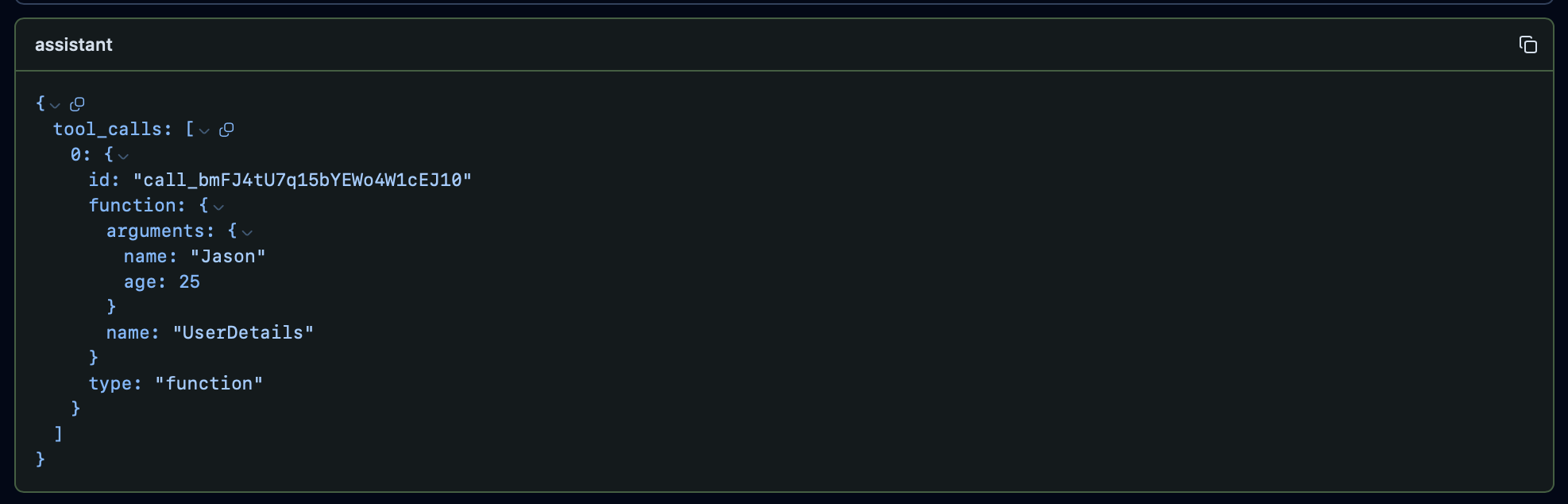

Once I execute this and look at the trace in Langfuse, I can see the calls made to the model. In the first response, the name was not in uppercase:

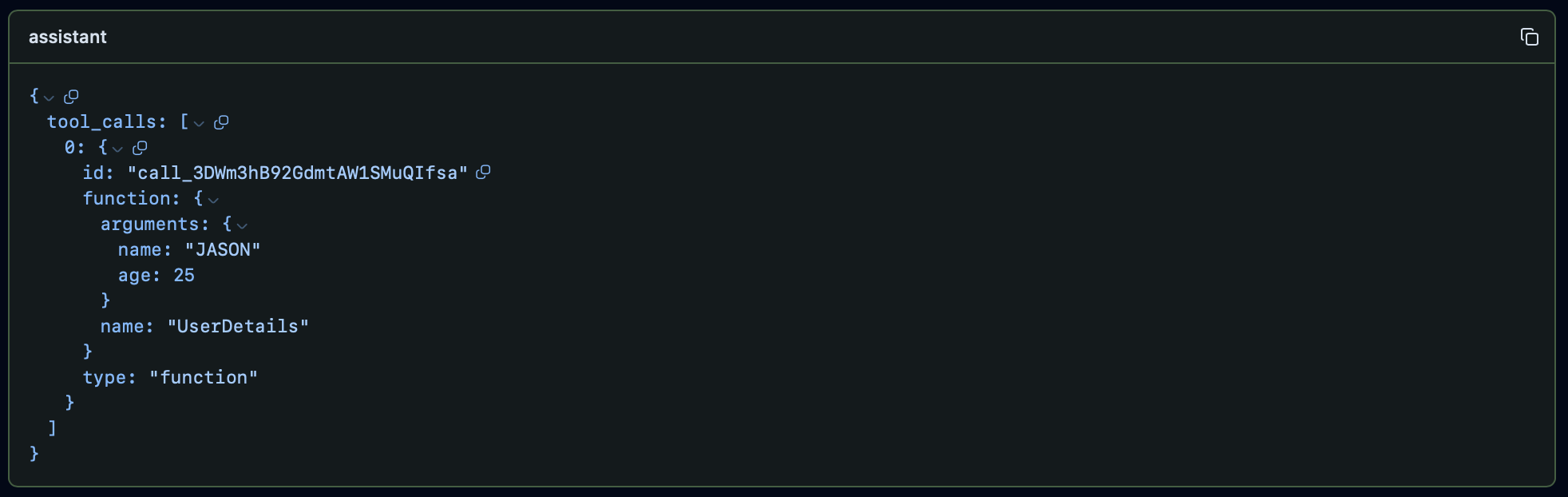

This was followed by a retry that included the Pydantic validation error, and this time the model returned the name in uppercase:

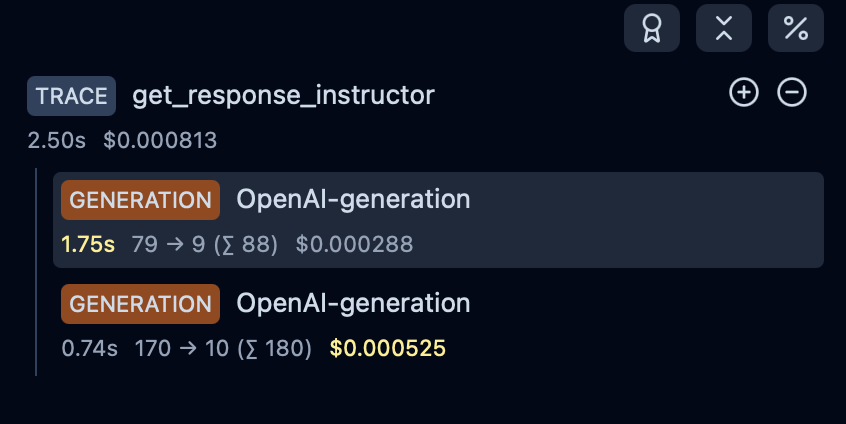
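To see exactly what gets fed back on that retry, the validator can be exercised on its own, without any LLM call. This is a minimal sketch assuming Pydantic v2; `validation_error_for` is a hypothetical helper for illustration, not part of Instructor.

```python
from typing import Optional

from pydantic import BaseModel, ValidationError, field_validator


class UserDetails(BaseModel):
    name: str
    age: int

    @field_validator("name")
    @classmethod
    def validate_name(cls, v: str) -> str:
        # Same rule as above: reject any name that is not fully uppercase
        if v.upper() != v:
            raise ValueError("Name must be in uppercase.")
        return v


def validation_error_for(payload: dict) -> Optional[str]:
    """Hypothetical helper: return Pydantic's error text, or None if the payload is valid."""
    try:
        UserDetails.model_validate(payload)
    except ValidationError as exc:
        return str(exc)
    return None


# The model's first answer fails; this text is what ends up in the retry prompt
print(validation_error_for({"name": "Jason", "age": 25}))
# The corrected answer passes, so no further call is needed
print(validation_error_for({"name": "JASON", "age": 25}))
```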

So what does this look like in cost? The whole request cost $0.000813, broken down into $0.000288 for the first call and $0.000525 for the second. Why did the second call cost more? Because the retry sends the Pydantic error message, along with the previous answer, back with the prompt, so more tokens are needed to get the correct response.
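A rough way to picture why each retry gets more expensive: if every attempt resends the conversation so far, the input grows by the rejected answer plus the error message each time. The token counts below are hypothetical placeholders, not the figures from the trace above, and the sketch ignores system prompts and tool schemas.

```python
from typing import List


def tokens_per_attempt(prompt_tokens: int, answer_tokens: int,
                       error_tokens: int, attempts: int) -> List[int]:
    """Input tokens sent on each attempt, assuming every retry resends the whole conversation."""
    sent = []
    context = prompt_tokens
    for _ in range(attempts):
        sent.append(context)
        # The next attempt also carries the rejected answer and the validation error
        context += answer_tokens + error_tokens
    return sent


# Hypothetical token counts, for illustration only
per_attempt = tokens_per_attempt(prompt_tokens=100, answer_tokens=20, error_tokens=50, attempts=3)
print(per_attempt, sum(per_attempt))
```

Under these made-up numbers, three attempts send roughly five times the input tokens of a single call, which is why a retry-heavy design can quietly multiply spend.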

Cost-effective options

Right, how does that compare with a native call that doesn't ask for the name in uppercase? The code snippet below shows that call, which still returns JSON because it uses OpenAI Structured Outputs.

```python
import os

from langfuse.decorators import observe  # Langfuse v2 SDK; in v3 use `from langfuse import observe`
from openai import AzureOpenAI
from pydantic import BaseModel


class User(BaseModel):
    name: str
    age: int


@observe()
def get_response_without_instructor():
    # Plain Azure OpenAI client, no Instructor patch
    client = AzureOpenAI(
        azure_endpoint=os.getenv("AZURE_OPENAI_ENDPOINT"),
        api_key=os.getenv("AZURE_OPENAI_API_KEY"),
        api_version=os.getenv("AZURE_OPENAI_API_VERSION"),
    )

    # Structured Outputs: the response is constrained to the User schema
    resp = client.beta.chat.completions.parse(
        model="gpt-4o",
        response_format=User,
        messages=[
            {"role": "user", "content": "Extract jason is 25 years old"},
        ],
    ).choices[0].message.content  # raw JSON string
    return resp


without_instructor_response = get_response_without_instructor()
```
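The function returns `message.content`, i.e. the JSON text. The OpenAI SDK's `parse` helper also exposes the typed object as `message.parsed`; alternatively the string can be validated locally, as in this minimal sketch where a literal stands in for a real response.

```python
from pydantic import BaseModel


class User(BaseModel):
    name: str
    age: int


# Stand-in for the string returned by get_response_without_instructor()
raw_content = '{"name":"Jason","age":25}'

# Validate the JSON text into a typed object, with no extra LLM call
user = User.model_validate_json(raw_content)
print(user.name, user.age)
```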

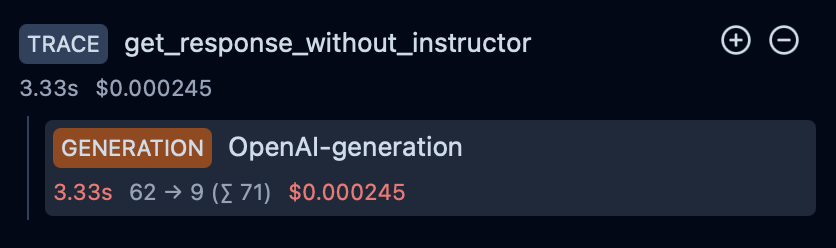

This code returns the JSON I need, but the name is not in uppercase. I'll come back to that shortly, as I want to focus on cost first. So what did it cost? $0.000245 for the single call to the LLM! The first version cost over three times as much (3.318 times, to be exact). That may seem insignificant for a single call, but when you are making millions of LLM calls, your app could be costing three times as much to run.
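The arithmetic behind that comparison, using the per-call costs from the Langfuse traces. The projection to one million calls is a hypothetical volume, and it assumes every request needs exactly one retry, as in this example.

```python
# Per-call costs (USD) reported in the Langfuse traces above
instructor_calls = [0.000288, 0.000525]  # first attempt + retry
structured_output_call = 0.000245

instructor_total = sum(instructor_calls)
ratio = instructor_total / structured_output_call

print(f"Instructor total: ${instructor_total:.6f}")
print(f"Ratio: {ratio:.3f}x")

# Hypothetical volume; assumes every request triggers exactly one retry
calls = 1_000_000
print(f"At {calls:,} calls: ${instructor_total * calls:,.2f} "
      f"vs ${structured_output_call * calls:,.2f}")
```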

Where did it go wrong? Let's get back to the uppercase requirement. LLMs have strengths and weaknesses: by default, the LLM will most likely return the name in mixed case, i.e. Jason, as that is what makes most sense. Once this is returned, the Instructor library checks whether it satisfies the Pydantic validation. If it doesn't, it takes the Pydantic error messages and passes them back in the prompt. In this instance, the error message specified that the name should be in uppercase. That second call then produces the uppercase name, i.e. JASON.
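The validate-and-retry cycle described above can be sketched as a simple loop. This is a simplified illustration, not Instructor's actual implementation: `extract_with_retries` and `fake_llm` are hypothetical names, and the stub stands in for a real model so the sketch runs offline.

```python
from typing import Callable, Dict, List, Tuple

from pydantic import BaseModel, ValidationError, field_validator


class UserDetails(BaseModel):
    name: str
    age: int

    @field_validator("name")
    @classmethod
    def validate_name(cls, v: str) -> str:
        if v.upper() != v:
            raise ValueError("Name must be in uppercase.")
        return v


Message = Dict[str, str]


def extract_with_retries(call_llm: Callable[[List[Message]], str], prompt: str,
                         max_attempts: int = 2) -> Tuple[UserDetails, int]:
    """Hypothetical loop: returns (validated result, number of LLM calls made)."""
    messages: List[Message] = [{"role": "user", "content": prompt}]
    for attempt in range(1, max_attempts + 1):
        raw = call_llm(messages)  # every attempt is a separate, billable LLM call
        try:
            return UserDetails.model_validate_json(raw), attempt
        except ValidationError as exc:
            # Feed the rejected answer and the error back, so the next prompt is longer
            messages.append({"role": "assistant", "content": raw})
            messages.append({"role": "user", "content": f"Please correct: {exc}"})
    raise RuntimeError("No valid response after all attempts")


def fake_llm(messages: List[Message]) -> str:
    # Hypothetical stand-in for the model: mixed case first, uppercase once told to
    if any("uppercase" in m["content"] for m in messages):
        return '{"name": "JASON", "age": 25}'
    return '{"name": "Jason", "age": 25}'


user, calls = extract_with_retries(fake_llm, "Extract jason is 25 years old")
print(user.name, calls)
```

The point of the sketch is the second call: it only exists because a rule the model was never told about up front had to be enforced after the fact.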

What about the second version? There we use Structured Outputs to guarantee JSON, and the name comes back as Jason, not in uppercase. But we can easily get uppercase in Python with the str.upper() method, which is not only faster but also gives the right answer 100% of the time.
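If you still want Pydantic in the loop, one option is a validator that transforms the value instead of rejecting it, so the case is fixed locally and nothing is ever sent back to the model. A minimal sketch assuming Pydantic v2; `normalise_name` is a hypothetical name, and calling `.upper()` on the parsed name directly works just as well.

```python
from pydantic import BaseModel, field_validator


class UserDetails(BaseModel):
    name: str
    age: int

    @field_validator("name")
    @classmethod
    def normalise_name(cls, v: str) -> str:
        # Fix the case in code instead of raising, so no retry is ever triggered
        return v.upper()


user = UserDetails.model_validate_json('{"name":"Jason","age":25}')
print(user.model_dump_json())
```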

Conclusion

When you integrate AI into your applications, you should only call an LLM for tasks that traditional programming would struggle with but an LLM handles easily, e.g. getting the intent from a sentence. Things like calculations, case conversions and string manipulation should be done in your programming language, with good libraries where needed, and not by the LLM. You also need to understand what third-party libraries and frameworks are doing under the covers. The Instructor library, for instance, is very useful when used with Pydantic, but you need to understand how to get the best out of it and know when to switch to a more cost-effective mechanism.

If your costs seem to be spiralling out of control, use a monitoring platform to check that your LLMs, libraries and frameworks are being used in the right way.